Research

I’m currently concentrating on advancing the core technologies behind the LocalLLM system. Last year, when I was exploring multimodal LLMs for image recognition, I couldn’t get performance that rivaled supervised learning, so I put that line of investigation on hold. However, with the emergence of ideas like test-time computing and prompt optimization, running LLMs as edge AI has become appealing once again.

Test-time computing, in particular, has become a central topic in our lab’s thesis reading group. I’ve been deepening my understanding by reading the selected papers and listening to my lab mates’ explanations. The situation is a bit of a treasure hunt, though: since OpenAI has kept the techniques behind o1 secret, many methods have been proposed under the banner of test-time computing, and there isn’t a single “best” way. Some models are trained to perform deep reasoning themselves (i.e., to spend more compute at inference time), while others add extra modules that verify and refine outputs.
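To make the second family concrete, here is a minimal sketch of two common output-level test-time strategies: self-consistency (sample several answers and take a majority vote) and best-of-N selection with a verifier. This is not how o1 works, since that remains undisclosed. The sampled answers and the `toy_verifier` function are hypothetical stand-ins for real LLM samples and a trained reward model.

```python
from collections import Counter
from typing import Callable, List


def self_consistency(samples: List[str]) -> str:
    """Majority vote over final answers from several sampled reasoning paths."""
    counts = Counter(samples)
    # most_common(1) returns [(answer, count)]; ties go to the answer seen first
    return counts.most_common(1)[0][0]


def best_of_n(candidates: List[str], verifier: Callable[[str], float]) -> str:
    """Return the candidate that the verifier scores highest."""
    return max(candidates, key=verifier)


def toy_verifier(answer: str) -> float:
    """Hypothetical verifier: prefers answers closer to 42.
    A real system would use a trained (outcome or process) reward model."""
    return -abs(int(answer) - 42)


if __name__ == "__main__":
    # Stand-in for N answers sampled from an LLM at temperature > 0
    sampled = ["42", "41", "42", "42", "40"]
    print(self_consistency(sampled))  # 42
    print(best_of_n(["40", "45", "43"], toy_verifier))  # 43
```

Both strategies trade extra inference calls for accuracy without retraining the model. Voting needs no extra model, while best-of-N is only as good as its verifier.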

Regarding prompt optimization, I’m particularly intrigued by data-driven approaches. I’m currently exploring methods that optimize both the prompt template and the demonstrations (few-shot examples); notable approaches include OPRO, MIPRO, TextGrad, and DSPy. One concern is that for deep-reasoning models like o1 or R1, the optimal prompt may differ significantly from the previous paradigm, and simpler prompts may work better. I’m still investigating this. Prompt optimization matters not just for LocalLLM but also for the AI agents that are gaining traction. In chat applications, each prompt is written once and varies from turn to turn. Agents, by contrast, typically reuse a fixed prompt template (apart from its variables), so the quality of that template can significantly impact performance.
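As a rough illustration of what “data-driven” means here, the sketch below scores every combination of a candidate template and a k-shot demonstration subset on a small labeled dev set, then keeps the best one. It uses exhaustive search over a tiny space. OPRO, MIPRO, and similar methods instead propose candidates with an LLM or a Bayesian optimizer, so treat this as a simplified stand-in rather than any of those algorithms. `toy_model` is a hypothetical placeholder for an actual LLM call.

```python
from itertools import combinations, product
from typing import Callable, List, Sequence, Tuple

Example = Tuple[str, str]  # (input, expected output)


def build_prompt(template: str, demos: Sequence[Example], query: str) -> str:
    """Fill a fixed template with few-shot demonstrations and the query."""
    shots = "\n".join(f"Q: {q}\nA: {a}" for q, a in demos)
    return template.format(shots=shots, query=query)


def dev_accuracy(model: Callable[[str], str], template: str,
                 demos: Sequence[Example], dev: Sequence[Example]) -> float:
    """Fraction of dev examples the model answers exactly right."""
    hits = sum(model(build_prompt(template, demos, q)) == a for q, a in dev)
    return hits / len(dev)


def search_prompt(model: Callable[[str], str], templates: List[str],
                  pool: List[Example], dev: List[Example], k: int = 2):
    """Exhaustively score every (template, k-demo subset) pair on the dev set."""
    best_score, best_template, best_demos = -1.0, None, None
    for template, demos in product(templates, combinations(pool, k)):
        score = dev_accuracy(model, template, demos, dev)
        if score > best_score:  # strict '>' keeps the first of any tie
            best_score, best_template, best_demos = score, template, demos
    return best_score, best_template, best_demos


def toy_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM: echoes the last query,
    upper-casing it only if the prompt asks for uppercase."""
    query = prompt.rsplit("Q: ", 1)[1].split("\n", 1)[0]
    return query.upper() if "uppercase" in prompt.lower() else query


if __name__ == "__main__":
    templates = [
        "Repeat the word.\n{shots}\nQ: {query}\nA:",
        "Answer in uppercase.\n{shots}\nQ: {query}\nA:",
    ]
    pool = [("dog", "DOG"), ("sun", "SUN"), ("tree", "TREE")]
    dev = [("cat", "CAT"), ("moon", "MOON")]
    score, template, demos = search_prompt(toy_model, templates, pool, dev)
    print(score, template.splitlines()[0], demos)
```

On this toy task the search picks the template that asks for uppercase output, reaching a dev accuracy of 1.0. With a real model, the same loop could also reveal which demonstrations help. This mirrors the agent setting above, where one fixed template is reused many times and is therefore worth optimizing.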

On a related note, I’ve also been experimenting with offline-first databases such as Turso and ElectricSQL for system development.

Life

I’m making the most of my surroundings and cherishing time with friends. Since the US presidential inauguration on January 20th, executive orders have been coming at an incredible pace. Mass deportations have started, and Google Maps is reportedly relabeling the Gulf of Mexico as the “Gulf of America” for US users. It feels like something out of a surreal novel.

More recently, DeepSeek has been making headlines, drawing attention not only in computer science circles but also among friends from other fields. A Chinese friend mentioned that some people on social media are using it to stir up nationalism (with slogans like “China is #1”), which he isn’t happy about (he strongly dislikes China’s autocratic regime). Meanwhile, the Bay Area has had significantly less rain than last year, a dryness also reflected in the LA wildfire news. There is also an egg shortage. The nearby Trader Joe’s is out of eggs, and when I finally found a pack of 12 at Safeway, it cost $10 (apparently because over 100 million birds have been culled due to avian influenza).

1. Research Activities

Tue: Thesis Reading Group @Lab

Every other Thu: Lunch Meeting @Lab

# AI Meetup

1/16 Tool Use @AmazonSF

1/23 Fullstack OSS AI @AmazonSF

1/28 LLMOps @GoogleHQ

I’m researching data-driven prompt optimization and test-time computing (agentic refining).

Taking advantage of the local scene, I attended several free AI meetups to gather information and expand my network. Vercel’s AI SDK currently meets my needs, but I’m keeping an eye on Toolhouse, a managed service for building AI agents that comes with a wealth of built-in tools. The Fullstack OSS AI meetup was particularly interesting. It focused on tips for getting high performance out of self-hosted OSS LLMs (including core topics like GPU implementations), which was academically enriching.

DeepSeek

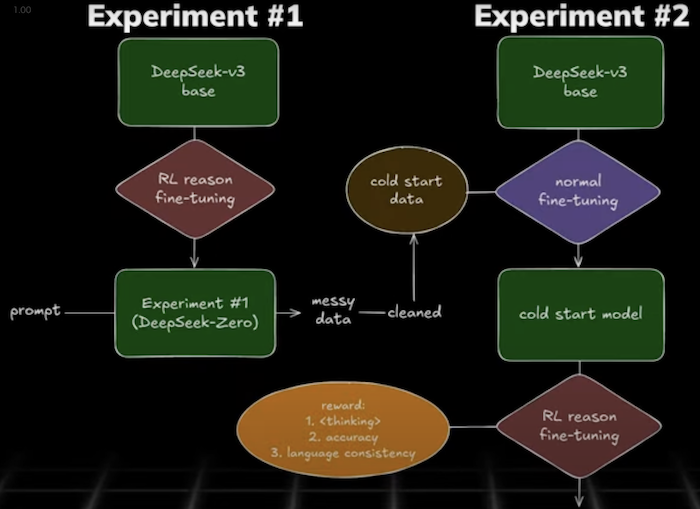

- A Chinese startup has garnered attention by achieving high performance in LLMs at a very low cost.

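- V3 is the base LLM. It significantly improves efficiency through features like Multi-head Latent Attention (MLA), which shrinks the KV cache, and a Mixture of Experts (MoE) architecture that activates only a fraction of its parameters for each token.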

- R1-Zero was trained from V3 (the V3-Base checkpoint) purely with reinforcement learning, using a method called GRPO (Group Relative Policy Optimization), to enable deep reasoning. It quickly became the talk of the community.

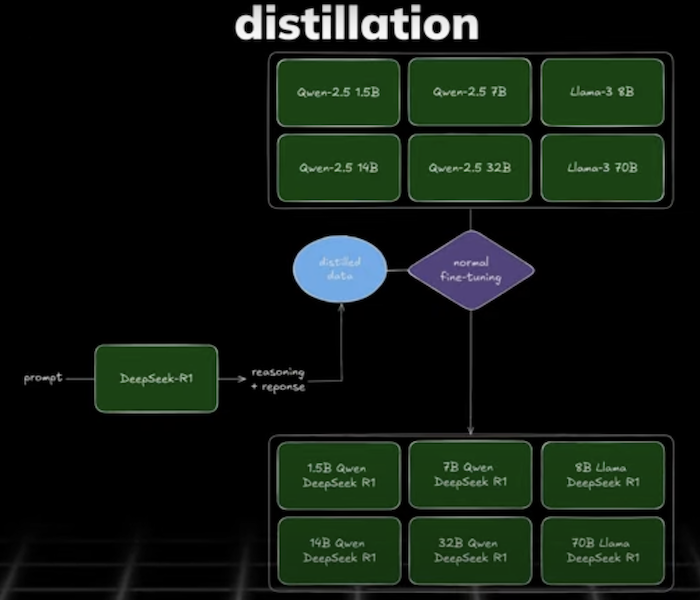

- The latest model, R1, builds on R1-Zero. Some of R1-Zero’s outputs were refined by hand into “cold-start” data, V3 was fine-tuned on that data with supervised fine-tuning (SFT), and further reinforcement learning improved the readability of the deep-reasoning outputs. While the model architecture and weights have been open-sourced, the training code remains private, prompting deeper analysis within the open community. I’ll be watching this space closely to deepen my understanding.

- Despite all the hype in the market, the consensus seems to be that investment in NVIDIA GPUs is not waning.

- TinyZero: an open reproduction of R1-Zero

- open-r1: Hugging Face’s open reproduction of R1 (already with plenty of stars)

- Dario Amodei’s general explanation
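The core idea of GRPO, as described in DeepSeek’s papers, is to drop the separate value (critic) model. For each prompt, the policy samples a group of G outputs, and each output’s advantage is its reward normalized against the rest of the group: A_i = (r_i − mean(r)) / std(r). The sketch below computes only this advantage step under that assumption. It omits the full objective (clipped policy-ratio updates with a KL penalty toward a reference model), and implementations differ on details such as sample versus population standard deviation.

```python
import statistics
from typing import List


def group_relative_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    """GRPO-style advantages: normalize each sampled output's reward against
    the other outputs sampled for the same prompt (no learned value model)."""
    mean = statistics.fmean(rewards)
    std = statistics.pstdev(rewards)  # population std; some code uses sample std
    # eps avoids division by zero when every output gets the same reward
    return [(r - mean) / (std + eps) for r in rewards]


if __name__ == "__main__":
    # Rule-based rewards for G = 4 answers to one math prompt: 1 = correct, 0 = wrong
    rewards = [1.0, 0.0, 0.0, 1.0]
    print([round(a, 3) for a in group_relative_advantages(rewards)])  # [1.0, -1.0, -1.0, 1.0]
```

Correct answers receive a positive advantage and wrong ones a negative advantage, so the update pushes the policy toward outputs that beat their own group’s average. This is what lets simple rule-based rewards, such as answer correctness, drive reasoning without a critic.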

Other Notable Releases at CES 2025

- NVIDIA Cosmos: a world foundation model platform for physical AI

- NVIDIA AI Blueprints: Customizable reference applications to speed up the development of agentic and generative AI

- NVIDIA Project DIGITS: A powerful desktop AI supercomputer priced at $3,000

Google’s Illuminate is also proving very useful:

- While generating podcasts with NotebookLM has become popular, using Illuminate to turn arXiv papers into audio digests is incredibly convenient.

- Listening to these digest podcasts before diving deep into papers or joining our journal club has become part of my routine.

2. Life Activities

1/1 Sushi Party

1/4 de Young Museum, Presidio

1/12 Hike & Costco

1/20 BBQ Party

1/26 Monterey Bay Aquarium

# Stanford Events

1/11 Volleyball Match

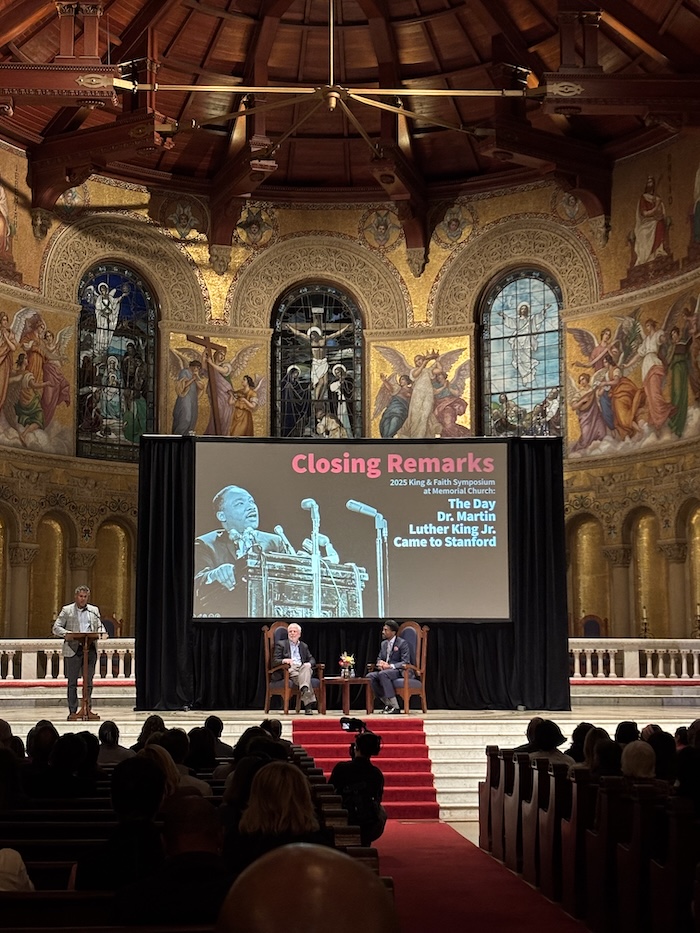

1/15 MLK Celebration

1/15 Political Union Seminar

1/18 Gymnastics Match

1/19 Wrestling Match

1/22 Seminar "Where is God in Gaze"

1/24 Global Chef Dinner

Tom’s sushi was authentically prepared and absolutely delicious.

For the third time, I visited the Monterey Bay Aquarium (I got a $65 ticket courtesy of a friend who is a marine researcher). Although it’s hailed as the world’s best aquarium, I found it just so-so. What really caught my interest was the historical context of Cannery Row. (The photo on the right is with an engineer from Yemen whom I met at AmazonSF.)

I’ve been actively participating in Stanford events to make full use of my environment. The sports teams, especially the gymnastics team, delivered some high-level performances.